Amazon Redshift: a new petabyte-scale data warehouse

Amazon has rolled out Redshift, a fundamentally new service for storing databases ranging from several hundred gigabytes to many petabytes. The product is aimed at corporate customers who are constrained by the 1 terabyte limit of traditional RDS but want to keep using familiar SQL applications and have their data immediately available.

A Redshift cluster can be launched in a few clicks from the AWS administration panel. The cost of storing data is comparable to ordinary S3 and depends on the cluster type and pricing plan. For example, with a three-year plan it comes to $999 per terabyte per year.
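To make the quoted rate concrete, here is a minimal Python sketch of the arithmetic. It assumes the $999 per terabyte per year figure applies linearly to every stored terabyte for every year of the plan and ignores any other charges or pricing details; this is a simplification for illustration, not Amazon's billing formula, and `storage_cost` is a hypothetical helper.

```python
# Rate quoted in the article for a three-year plan, in USD per terabyte per year.
RATE_PER_TB_YEAR = 999


def storage_cost(terabytes: float, years: int = 3, rate: float = RATE_PER_TB_YEAR) -> float:
    """Rough storage cost in USD (hypothetical helper).

    Simplifying assumption: cost scales linearly with stored terabytes and
    years; any other charges or pricing details are ignored.
    """
    if terabytes < 0 or years < 0:
        raise ValueError("terabytes and years must be non-negative")
    return terabytes * years * rate


if __name__ == "__main__":
    print(storage_cost(10))           # 10 TB for the full three-year term
    print(storage_cost(10, years=1))  # 10 TB for a single year
```

Under these assumptions, 10 TB costs 10 × $999 = $9,990 per year, or $29,970 over the three-year term.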

Redshift offers users two types of cluster nodes: XL and 8XL.

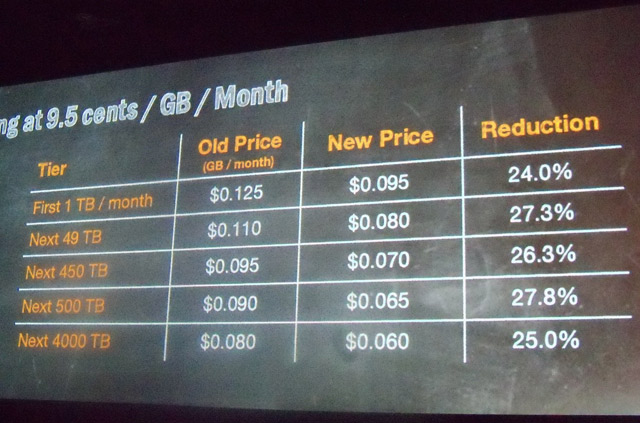

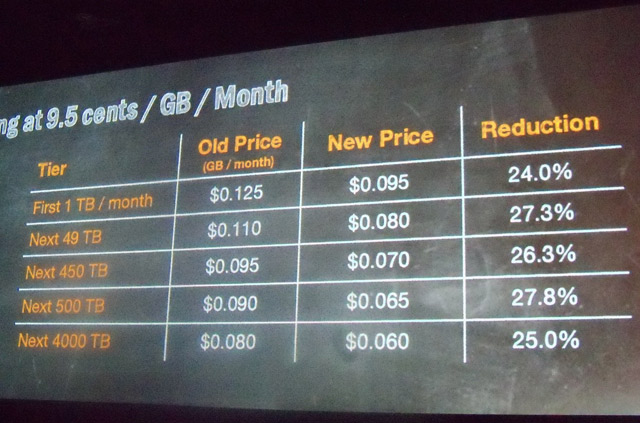

Alongside the Redshift announcement, Amazon cut the price of S3 storage by 25%.

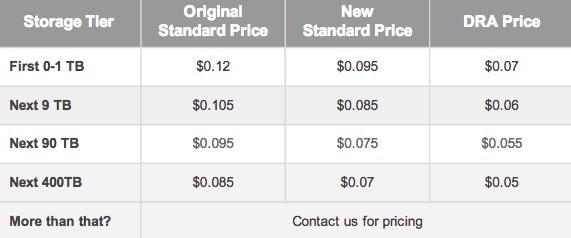

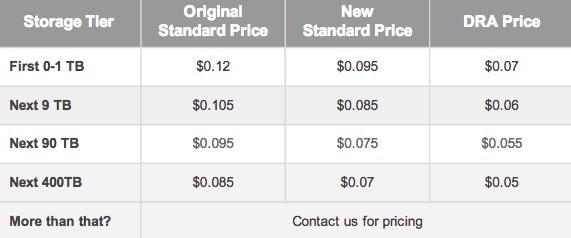

On the same day, Google also cut data storage prices on Google Cloud Platform by 20% and announced Durable Reduced Availability Storage, a new service for the cheapest long-term archive storage, similar to AWS Glacier.

Article based on information from habrahabr.ru

High Storage Extra Large (XL) DW Node

- CPU: 2 virtual cores
- ECU: 4.4
- Memory: 15 GiB
- Disks: 3 HDDs with 2 TB of locally attached storage
- Network: moderate
- Disk I/O speed: moderate
- API: dw.hs1.xlarge

High Storage Eight Extra Large (8XL) DW Node

- CPU: 16 virtual cores
- ECU: 35
- Memory: 120 GiB
- Disks: 24 HDDs with 16 TB of locally attached storage
- Network: 10 Gigabit Ethernet
- Disk I/O speed: very high
- API: dw.hs1.8xlarge
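The spec lists lend themselves to a quick sizing estimate. Below is a minimal Python sketch, assuming a node's raw local storage (2 TB for XL, 16 TB for 8XL) is fully usable for data, which ignores compression, replication and working-space overhead; `NodeType` and `nodes_needed` are hypothetical helpers, not part of any AWS SDK.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """Hypothetical record of the node figures listed in the article."""
    api_name: str
    vcpus: int
    memory_gib: float
    storage_tb: float


# Values taken from the XL and 8XL spec lists.
XL = NodeType("dw.hs1.xlarge", vcpus=2, memory_gib=15, storage_tb=2)
EIGHT_XL = NodeType("dw.hs1.8xlarge", vcpus=16, memory_gib=120, storage_tb=16)


def nodes_needed(data_tb: float, node: NodeType) -> int:
    """Smallest node count whose raw local storage covers data_tb.

    Assumptions: raw disk equals usable capacity (no compression,
    replication or temp-space overhead), and a cluster has at least one node.
    """
    if data_tb < 0:
        raise ValueError("data_tb must be non-negative")
    return max(1, math.ceil(data_tb / node.storage_tb))


if __name__ == "__main__":
    for node in (XL, EIGHT_XL):
        count = nodes_needed(100, node)
        # Node count and the total raw storage those nodes provide, in TB.
        print(node.api_name, count, count * node.storage_tb)
```

Under these assumptions, 100 TB of data would need 50 XL nodes or 7 8XL nodes (112 TB of raw storage); the larger node trades finer capacity granularity for fewer machines.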