Course of the young fighter PostgreSQL

I want to share with you some useful techniques for working with PostgreSQL (and other DBMS have similar functionality, but can have different syntax).

I will try to cover a variety of topics and techniques that will help when working with data, trying not to go into a detailed description of a functionality. I loved these articles, when studied independently. It's time

This material will be useful to those who fully mastered the basic skills of SQL and want to learn more. I advise you to run and experiment with the examples in the pgAdmin'e, I did all the SQL queries feasible without expanding any of the dumps.

Go!

the

1. The use of temporary tables

When solving complex problems hard to put the decision in one request (although many try to do so). In such cases, it is convenient to put any intermediate data.econtinuing the table to use them in the future.

These tables are created as usual, but with the keyword TEMP, and are automatically deleted after the session.

The key ON COMMIT DROP automatically deletes a table (and all related objects) at the completion of the transaction.

Example:

the

ROLLBACK;

BEGIN;

CREATE TEMP TABLE my_fist_temp_table -- should use a more unique name

ON COMMIT DROP -- drop the table at the end of the transaction

AS

SELECT 1 AS id, CAST ('some value' AS TEXT) AS val;

------------ Additional manipulation of the table: ------------------

-- alter the table by adding a column. I will often touch on related topics

ALTER TABLE my_fist_temp_table

ADD COLUMN is_deleted BOOLEAN NOT NULL DEFAULT FALSE;

- for those who do not know, most often the data in the tables are not deleted, but are marked as deleted with a similar flag

CREATE UNIQUE INDEX ON my_fist_temp_table (lower(val))

WHERE is_deleted = FALSE; -- you could even create an index/constraint, if necessary

-- this index will not allow to insert a duplicate(not case sensitive) for column VAL for non-deleted rows

-- manipulate table data

UPDATE my_fist_temp_table

SET id=id+3;

- to check/use the contents of a table

SELECT * FROM my_fist_temp_table;

--COMMIT;the

2. Commonly used shorthand syntax Postgres

the

-

the

- Convert data types.

Expression:

the

SELECT CAST ('365' AS INT);you can write less cumbersome:

the

SELECT '365'::INT;the

-

the

- Shorthand (I)LIKE '%text%'

LIKE perceives template expression. Details the manual

the operator LIKE can be replaced by ~~ (two tildes)

the operator ILIKE can be replaced by ~~* (two tilde with an asterisk)

Search regular expressions (has non-LIKE syntax)

the

the

Give an example of search in different ways the rows that contain the word text

| Abbreviated syntax | Description | Analog (I)LIKE |

|---|---|---|

| ~ ‘text’ or ~~ ‘%text%’ |

Verifies that the expression is case-sensitive | LIKE '%text%' |

| ~* ‘text’ ~~* ‘%text%’ |

Validates the expression case insensitive | ILIKE '%text%' |

| !~ ‘text’ !~~ ‘%text%’ |

Checks the mismatch expression, case sensitive | NOT LIKE '%text%' |

| !~* ‘text’ !~~* ‘%text%’ |

Checks the discrepancy between the expression case insensitive | NOT ILIKE '%text%' |

the

3. Common table expressions (CTE). Design WITH

Very comfortable design, allows you to put the query result into a temporary table and then use it.

Examples are primitive to capture the essence.

a) a Simple SELECT

the

WITH cte_table_name AS ( -- set we were comfortable with the table name

SELECT schemaname, tablename, - our any request

ORDER BY 1,2

)

SELECT * FROM cte_table_name; -- specify our table

--in fact receive the result of the query in parenthesesThis way you can 'wrap' any requests (even UPDATE, DELETE and INSERT, this will be below) and use their results in the future.

b) you Can create multiple tables, listing them in the following way

the

WITH

table_1 (col,b) AS (SELECT 1,1), -- first table

table_2 (col,c) AS (SELECT 2,2) -- second table

--,table_3 (cool,yah) AS (SELECT 2,2 from table_2) -- recently learned that you can refer to the table above

SELECT * FROM table_1 FULL JOIN table_2 USING (col);c) you Can even put the above design in one (or more) WITH

the

WITH super_with (col,b,c) AS ( /* can set the column names in parentheses after the table name */

WITH

table_1 (col,b) AS (SELECT 1,1),

table_2 (col,c) AS (SELECT 2,2)

SELECT * FROM table_1 FULL JOIN table_2 USING (col)-- specify our table

)

SELECT col, b*20, c*30 FROM super_with;Performance should say that it is not necessary to place in the section WITH data that will largely be filtered subsequent to the external environment (outside the parentheses of the query), because the optimizer will not be able to build an effective query. Convenient to put in CTE the results that you want some time to apply.

the

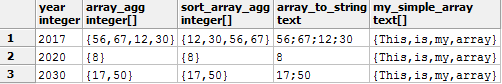

4. Function array_agg(MyColumn).

Values in a relational database is stored separately (the attributes of one object can be represented in multiple rows). To transfer data to any application, you often need to collect data in one row (cell) or array.

In PostgreSQL there is a function array_agg(), she allows to collect in an array the data in the entire column (if the sample from one column).

When you use the GROUP BY in the array gets the data for a column for each group.

Immediately describe another feature and move on to the example.

array_to_string(array[], ';') allows you to convert the array into a string: the first parameter specifies an array, the second we were comfortable with the delimiter in single quotes (apostrophes). As delimiter you can use

special characters

Tab \t — for example, will allow you to insert cells in EXCEL without effort to split the values into columns (you can use it like this: array_to_string(array[], E'\t') )

Line \n — decomposed array values across rows in a single cell (use array_to_string(array[], E'\n') — 'll explain why below)

Line \n — decomposed array values across rows in a single cell (use array_to_string(array[], E'\n') — 'll explain why below)

Example:

the

-- create and fill data table with above described method

WITH my_table (ID, year, any_val) AS (

VALUES (1, 2017,56)

,(2, 2017,67)

,(3, 2017,12)

,(4, 2017,30)

,(5, 2020,8)

,(6, 2030,17)

,(7, 2030,50)

)

SELECT year

,array_agg(any_val) -- collect data (for each year) into an array

,array_agg(any_val ORDER BY any_val) AS sort_array_agg -- the order of the items can be sorted (with 9+ versions of Postgres)

,array_to_string(array_agg(any_val),';') -- convert an array into a string

,ARRAY['This', 'is', 'my' , 'array'] AS my_simple_array is a way of creating an array

FROM my_table

GROUP BY year; -- group data by yearWill give the result:

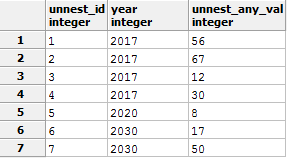

Perform the back action. Decompose the array into a string using the function UNNEST, at the same time demonstrate the design SELECT columns INTO table_name. To put this in a spoiler so that the article is not much swollen.

UNNEST a query

The result:

-- 1 Preparatory phase

- in the process request table will be created tst_unnest_for_del, with the SELECT INTO

-- below query is not generated an error, if you will several times to run this script, will the script delete the table.

-- I also hope that you start this is not your production server any project where there is a table

DROP TABLE IF EXISTS tst_unnest_for_del; /* IF EXISTS will not cause errors if the tables to be deleted does not exist */

WITH my_table (ID, year, any_val) AS (

VALUES (1, 2017,56)

,(2, 2017,67)

,(3, 2017,12)

,(4, 2017,30)

,(5, 2020,8)

,(6, 2030,17)

,(7, 2030,50)

)

SELECT year

,array_agg(id) AS -- collect data arr_id(id) for each year in the mountains

INTO tst_unnest_for_del -- !! way to create and populate the tables from the result

FROM my_table

GROUP BY year;

--2 Demonstration functions Unnest

SELECT unnest(arr_id) unnest_id -- parse the id column

that year

,unnest(arr_any_val) unnest_any_val -- parse the column any_val

FROM tst_unnest_for_del

ORDER BY 1 -- restore the sorting by id, no forced sorting data can be arranged randomlyThe result:

the

5. Keyword RETURNIG *

is specified after the query INSERT, UPDATE or DELETE allows you to see the rows affected by the modification (usually the server reports only the number of modified rows).Convenient in conjunction with BEGIN to see what impact the request, in case of uncertainty in the result or to transmit any id to the next step.

Example:

the

--1

DROP TABLE IF EXISTS for_del_tmp; /* IF EXISTS will not cause errors if the tables to be deleted does not exist */

For_del_tmp -- CREATE TABLE Create table

AS --Fill data generated from the query below

SELECT generate_series(1.1000 level) AS id, -- Generated 1000 numbered rows

random() AS values; -- Fill with random numbers

--2

DELETE FROM for_del_tmp

WHERE id > 500

RETURNING *;

/*Will show all the deleted rows this command

RETURNING * will return all columns of the table test

you can also list the columns in the SELECT (approx. RETURNING id,name)*/Can be used in conjunction with CTE, organize

PS

I am very confuse, fear that came hard, but I tried to comment on.

the

--1

DROP TABLE IF EXISTS for_del_tmp; /* IF EXISTS will not cause errors if the tables to be deleted does not exist */

For_del_tmp -- CREATE TABLE Create table

AS --Fill data generated from the query below

SELECT generate_series(1.1000 level) AS id, -- Generated 1000 numbered rows

((random()*1000)::INTEGER)::text as values; /* Fill with random numbers. PS I Have Postgre 9.2 Random() returns a fractional number less than one, multiply by 1000 to obtain the integer part, then convert to INTEGER to get rid of the fractional part and convert to text, because I want the data type of created column TEXT*/

--2

DELETE FROM for_del_tmp

WHERE id > 500

RETURNING *; -- This query simply deletes the record, returning the deleted rows on the screen

--3

WITH deleted_id (id) AS

(

DELETE FROM for_del_tmp

WHERE id > 25

RETURNING id -- removed another piece of data, recording an id in our CTE "deleted_id"

)

INSERT INTO for_del_tmp -- initiate INSERT

SELECT id, 'Deleted row' || now()::TIME || 'and if to be exact,' | | timeofday()::TIMESTAMP /* here you can see how the time difference returned by functions (depends on the description of the functions will not go deep, and so far)*/

FROM deleted_id -- insert the deleted data from the "for_del_tmp" in it

RETURNING *; -- see what poinsettias

- the whole unit can be run indefinitely, we insert the deleted data in the same table.

--4

SELECT * FROM for_del_tmp; -- check what happened in the endThus, to perform the data deletion, and deleted values will be passed to the next stage. It all depends on your imagination and goals. Before applying intricate designs be sure to check the documentation for your version of DBMS! (with a parallel combination of INSERT, UPDATE or DELETE are the subtleties).

the

6. Saving query result to a file

The team COPY many different settings and assignments, will describe the simplest application for review.

the

COPY (

SELECT * FROM pg_stat_activity /* Our request. For example: system table of running processes of the database */

--) TO 'C:/TEMP/my_proc_tst.csv' -- Write the query result to a file. Example for Windows

) TO '/tmp/my_proc_tst.csv' -- the Record of a query result to a file. Example for LINUX

--) TO STDOUT -- displays the data to the console or log pgAdmin

WITH CSV HEADER -- Optional string. Reports the name of the table columns in filethe

7. Execution of a query on another database

Not so long ago found out that you can address a request to the other base, this is the function dblink (all the details in the manual)

Example:

the

SELECT * FROM dblink(

'SELECT "Remote database:" || current_database()' /* has its own nuances and limitations. As an example, the request is passed in single quotes, so the quotes inside the query should be escaped (in this example, for shielding use two single quotes in a row). */

)

RETURNS (col_name TEXT)

UNION ALL

SELECT 'Current database:' || current_database();

If an error occurs:

"ERROR: function dblink(unknown, unknown) does not exist"you must install the extension with the following command:

the

CREATE EXTENSION dblink;the

8. The similarity function

A function for determining the similarity of one value to another.

Used to map text data that were similar, but not equal to each other (there were typos). Saved a lot of time and nerves, minimizing manual binding.

similarity(a, b) produces a fractional number from 0 to 1, the closer to 1, the more accurate the match.

Let us turn to the example. Using WITH set up a temp table with fictional data (and specifically warped for demonstration functions), and will compare each line of our text. In the example below we look for something more like , OOO "ROMASHKA" (substitute the second parameter of the function).

the

WITH company (id,c_name) AS (

VALUES (1, 'OOO Daisy')

UNION ALL

/* PS UNION ALL is faster than UNION, because there is no forced sorting to eliminate duplicates, which we do not need in this case */

VALUES (2, 'OOO "ROMASHKA"')

UNION ALL

VALUES (3, 'OOO Ramashka')

UNION ALL

VALUES (4, 'JSC "ROMAXA"')

UNION ALL

VALUES (5, 'JSC DAISY')

UNION ALL

VALUES (6, 'OOO RO MASHKA')

UNION ALL

VALUES (7, 'OOO BARNYARD')

UNION ALL

VALUES (8, 'ZAO DAISY')

UNION ALL

VALUES (9, 'How did this get here?')

UNION ALL

VALUES (10, 'Daisy 33')

UNION ALL

VALUES (11, 'SP "Romashkevich"')

UNION ALL

VALUES (12, 'OOO "Roma Skovic"')

UNION ALL

VALUES (13, 'FE "Roma Skovic"')

)

SELECT *, similarity(c_name,', OOO "ROMASHKA"')

,dense_rank() OVER (ORDER BY similarity(c_name,', OOO "ROMASHKA"') DESC)

AS "result Ranking" -- window functions, it is described below

FROM company

WHERE similarity(c_name,', OOO "ROMASHKA"') >0.25 -- values from 0 to 1, the closer to 1, the more accurate the match

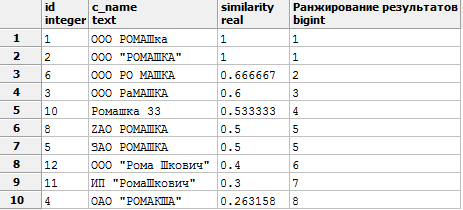

ORDER BY similarity DESC;We get the following result:

If an error occurs

"ERROR: function similarity(unknown, unknown) does not exist"you must install the extension with the following command:

the

CREATE EXTENSION pg_trgm;a more complex Example

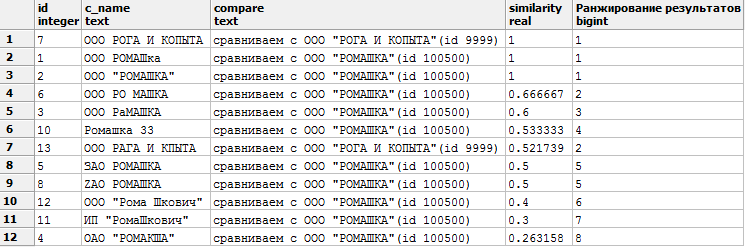

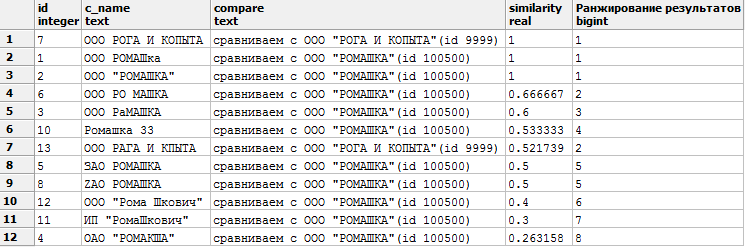

Get this result:

WITH company (id,c_name) AS ( -- input data table

VALUES (1, 'OOO Daisy')

,(2, 'OOO "ROMASHKA"')

,(3, 'OOO Ramashka')

,(4, 'JSC "ROMAXA"')

,(5, 'JSC DAISY')

,(6, 'OOO RO MASHKA')

,(7, 'OOO BARNYARD')

,(8, 'ZAO DAISY')

,(9, 'How did this get here?')

,(10, 'Daisy 33')

,(11, 'SP "Romashkevich"')

,(12, 'OOO "Roma Skovic"')

,(14, 'FE "Roma Skovic"')

,(13, 'OOO RAGA AND CAPITA')

),

compare (id, need) -- AS our base for comparison

(VALUES (100500, 'OOO "ROMASHKA"')

,(9999, 'OPEN COMPANY "HORNS AND HOOFS"')

)

SELECT c1.id, c1.c_name, 'compare with' || c2.need, similarity(c1.c_name, c2.need)

,dense_rank() OVER (PARTITION BY c2.need ORDER BY similarity(c1.c_name, c2.need) DESC)

AS "result Ranking" -- window functions, it is described below

FROM company c1 CROSS JOIN c2 compare

WHERE similarity(c_name, c2.need) >0.25 -- values from 0 to 1, the closer to 1, the more accurate the match

ORDER BY similarity DESC;Get this result:

Sortable similarity DESC. The first results we see the most similar row (1 is perfect similarity).

Optionally print the value of similarity SELECT, you can simply use it in the WHERE clause similarity(c_name,', OOO "ROMASHKA"') >0.7

and ask yourself suits us the option.

PS I would be grateful if you tell me what are some other ways of mapping the text data. Tried to remove regular expressions everything except the letters/numbers, and compare for equality, but this option does not work if there are typos.

the

9. The window function OVER() (PARTITION BY __ ORDER BY __ )

Almost described in your draft this is a very powerful tool, found

the

10. Multiple template LIKE

Task. You want to filter the list of users whose names must conform to certain templates.

As always, will present the simplest example:

the

-- Create table with data

CREATE TEMP TABLE users_tst (id, u_name)

AS (VALUES (1::INT, NULL::VARCHAR(50))

,(2, 'Juliana H.')

,(3, 'Simon I.')

,(4, 'Victoria T')

,(5, 'Olga S.')

,(6, 'Elizabeth I.')

,(7, 'Nicholas H.')

,(8, 'Isaac R.')

,(9, 'Elisha A.')

);Have a query that performs its function, but becomes cumbersome when a large number of filters.

the

SELECT * FROM users_tst

WHERE u_name LIKE'%'

OR u_name LIKE '%AA%'

OR u_name LIKE 'Juliana H.'

OR u_name LIKE 'Elisha%'

-- etcWill show you how to make it more compact:

the

SELECT * FROM users_tst

WHERE u_name LIKE ANY (ARRAY ['%', '%AA%', 'Juliana H.', 'Elisha%'])You can do interesting tricks, using a similar approach.

Write in the comments if you have any thoughts on how to rewrite the original query.

the

11. Some useful functions

NULLIF(a,b)

There are situations when a certain value should be interpreted as NULL.

For example, a zero-length string ( " — empty string) or zero(0).

You can write CASE, but more concise to use the NULLIF function which has 2 parameters, with the equality which returns NULL, otherwise prints out the original value.

the

SELECT id

,param

,CASE WHEN param = 0 THEN NULL ELSE -- param END the decision through a CASE

,NULLIF(param,0) -- solution using NULLIF

val

FROM(

VALUES( 1, 0, 'IN the column on the left was 0' )

) AS tst (id,param,val);COALESCE selects the first not NULL value

the

SELECT COALESCE(NULL,NULL,-20,1,NULL,-7); --choose -20GREATEST selects the maximum value of the following

the

SELECT GREATEST(2,1,NULL,5,7,4,-9); --choose 7LEAST selects the minimum value of the following

the

SELECT LEAST(2,1,NULL,5,7,4,-9); -- choose -9 PG_TYPEOF shows the data type of the column

the

SELECT pg_typeof(id), pg_typeof(arr), pg_typeof(NULL)

FROM (VALUES ('1'::SMALLINT, array[1,2,'3',3.5])) AS x(id,arr);

- will show smallint, numeric [], and unknown, respectively IT stopping unwanted processes in a database

the

SELECT pid, query, * FROM pg_stat_activity -- table process database. In older versions of postgres column PID was called PROCPID

WHERE state <> 'idle' and pid < > pg_backend_pid(); -- exclude connection and its just a process called

SELECT pg_terminate_backend(PID); /* substitute in here the PID of the process which we want to stop, unlike commands, send a gentle signal to end, which may not always kill the process*/

SELECT it(PID); /* substitute in here the PID of the process which we want to stop. Almost guaranteed kills the query, something like KILL -9 in LINUX */

Read more in the manual

PS

Attention! In no case do not kill the hung process using the console KILL -9 or task Manager.

This can lead to the collapse of the database, loss of data and a long automatic recovery of the database.

SELECT it(pid) FROM pg_stat_activity -- for example kill all the process

WHERE state <> 'idle' and pid < > pg_backend_pid();Attention! In no case do not kill the hung process using the console KILL -9 or task Manager.

This can lead to the collapse of the database, loss of data and a long automatic recovery of the database.

the

12. Escaping characters

Start with the basics.

In SQL, string values are enclosed in ' an apostrophe (single quote).

Numeric values are not framing apostrophes, and to separate the fractional part you need to use a period, since the comma is interpreted as separator

the

SELECT 'My text', 365, 567.6, 567,6 the result:

All is well, until not want to output the apostrophe character '

For this there are two methods of screening (known to me)

the

SELECT 1, 'Apostrophe," and two apostrophes in a row "" ' -- escaping double writing "

UNION ALL

SELECT 2, E Apostrophe \' and two apostrophes in a row, \ ' \ '' -- escaping with a backslash, , English letter E before the first quotation mark is necessary so that the \ character was perceived as an escape characterthe result is the same:

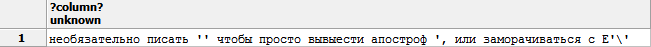

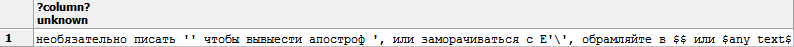

In PostgreSQL there is a more convenient way to use the data without escape characters. In framed by two dollar signs $$ line, you can use almost any character.

Example:

the

select $$is optional to write " just to pull out an apostrophe ', or to bother with E'\' $$receive the data in its original form:

If this is not enough, and inside you want to use two dollar symbol in a row $$, Postgres allows you to specify a "delimiter". It is only between two dollars to write your text, for example:

the

select $uniq_tAg$ is not necessary to write " just to pull out an apostrophe ', or to bother with E'\', bramlette $$ or $any_text$ $uniq_tAg$See our text:

This method has opened not so long ago, when I began to study writing functions.

the

Conclusion

We hope this information will help you to learn a lot of new beginners and "srednicki". I myself am not a developer, but I can only call myself a fan of SQL, so how to use the techniques is up to You.

I wish you success in learning SQL. Waiting for comments and thanks for reading!

Comments

Post a Comment