Megaspectacle "Eurointegral"

Hello, Habr! In this post I want to tell you about a project I have been working on for the last 4 months.

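We are talking about a technological megaspectacle created as part of the New Stage of the Alexandrinsky Theatre. Its main characters are the emotional state and thoughts of the performer. To begin with, here is the official description from the project's social network pages:

Megaspectacle "Eurointegral" is an experimental project in the field of science art, a direction that is rare on today's Russian stage: art that uses and interprets the achievements of modern science and technology. The show, created under the guidance of the well-known St. Petersburg media artist Yury Didevich, explores one of the key themes of turn-of-the-century culture, the interaction between human and machine; the attempt to establish a cultural dialogue between them is the central aim of "Eurointegral". The play uses the biopotentials of the performer's brain (electroencephalogram, EEG) to control the audiovisual algorithms of the performance.

"Eurointegral" is the first theater project implemented on the basis of the media center of the New Stage of the Alexandrinsky Theatre.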

If you are interested, read on below the cut. As a Habr exclusive, there are a few photos of the work process at the end.

The gist

The megaspectacle "Eurointegral" raises the question of the possible forms of interaction between human, computer and interface, and of the range of roles each of them can play.

Sound and image on stage are generated in real time by software written specially for the play. They define a dynamic coordinate system against which events, coincidences, dynamics and drama unfold. The performance is controlled by the emotional state of the performer, who, through an energy-information exchange with the computer, controls the sound and visual media. The performer reacts to an audiovisual event of which he himself is the source, and this closes a biofeedback loop.

What "Eurointegral" is made of

To read the performer's emotional state we use the Emotiv Epoc Research Edition interface. Its SDK provides emotional-state parameters such as excitement and frustration, and it can also be trained to recognize several mental commands that can be assigned to control any parameter of the system. You can google, for example, how a disabled person controls a wheelchair with their mind. In the photo below, performer Sasha Rumyantsev moves a 3D cube during training. On the screen you can also see graphs of the brain's electrical potentials, recorded in real time.

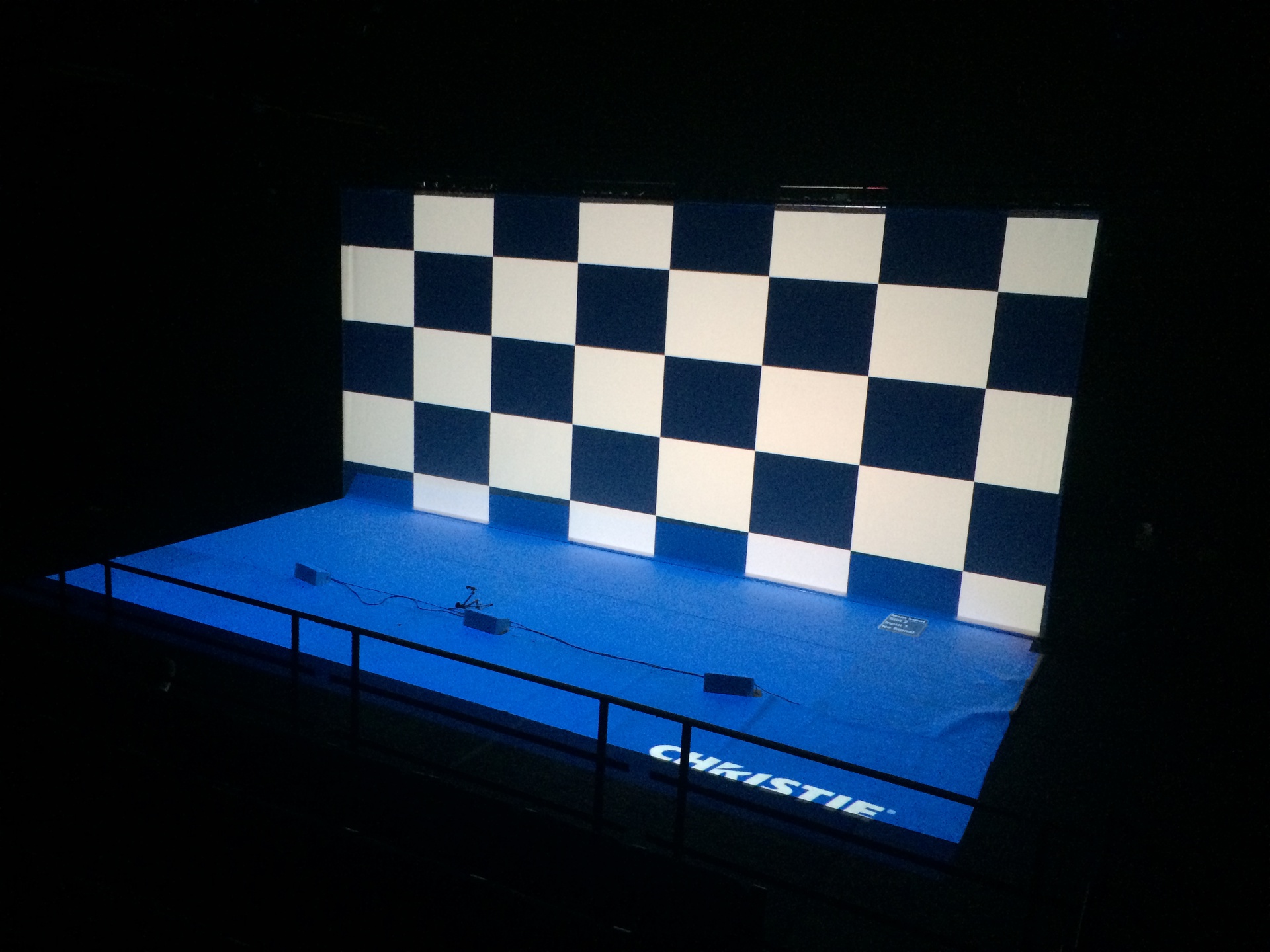

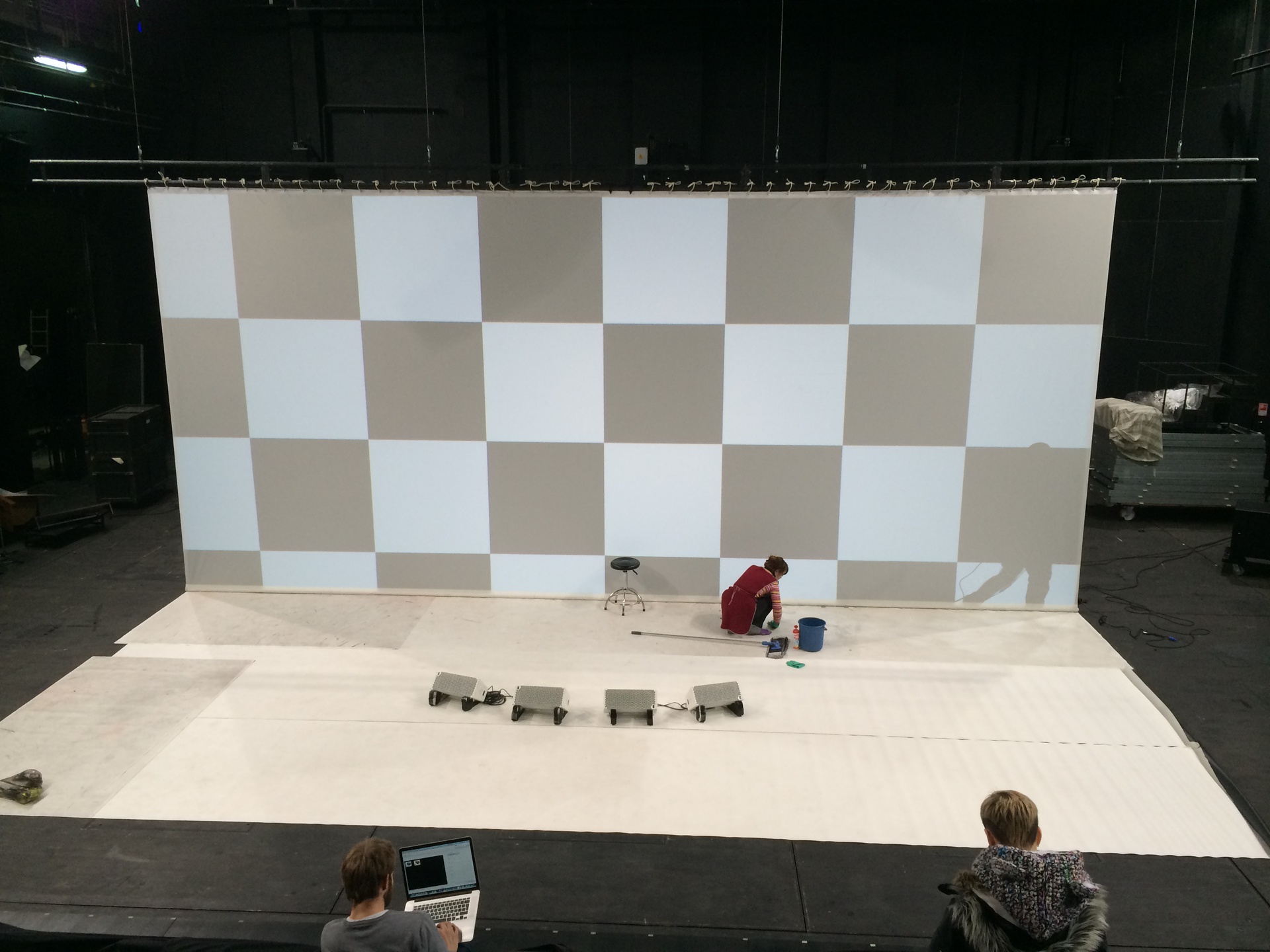

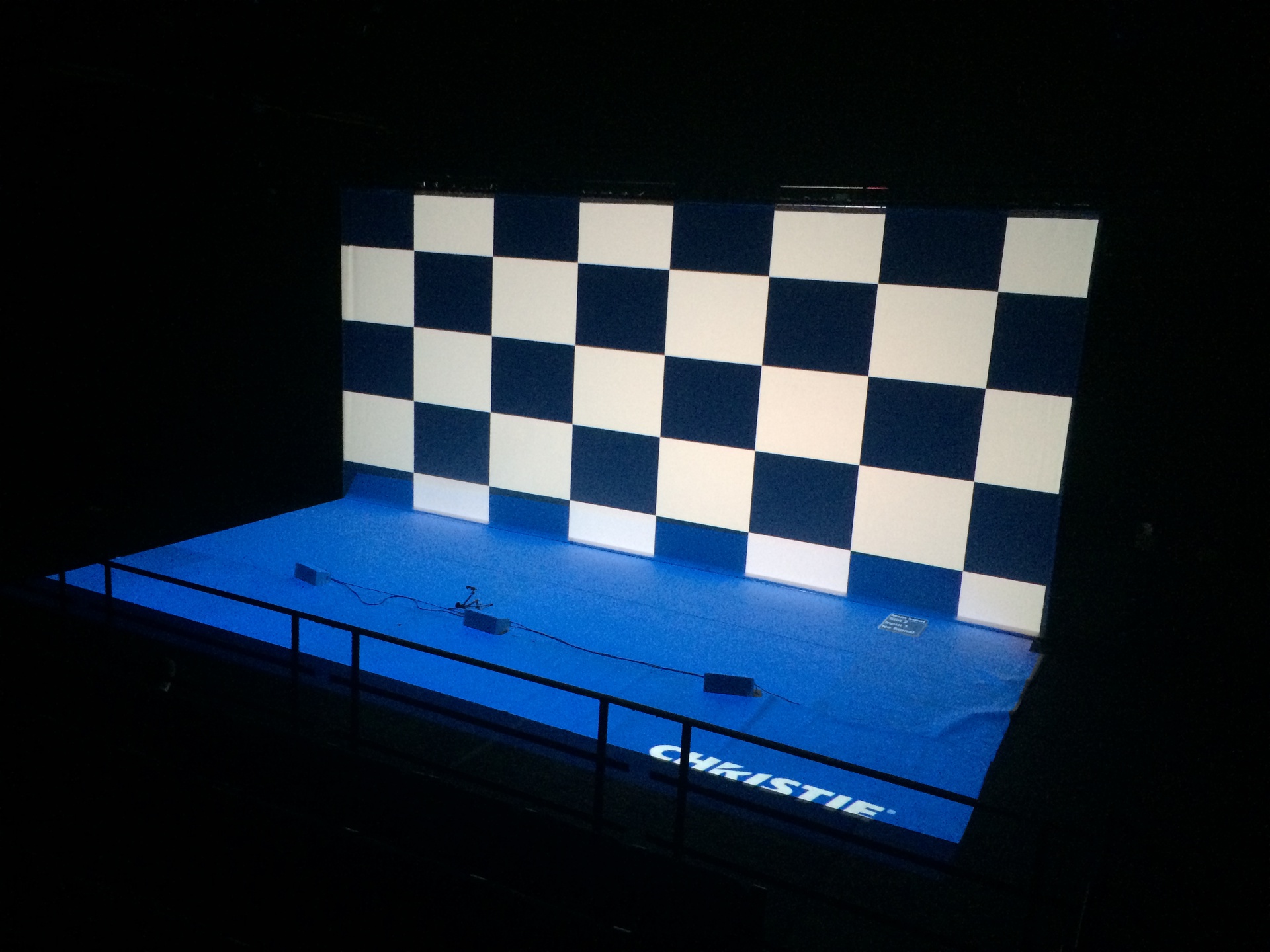

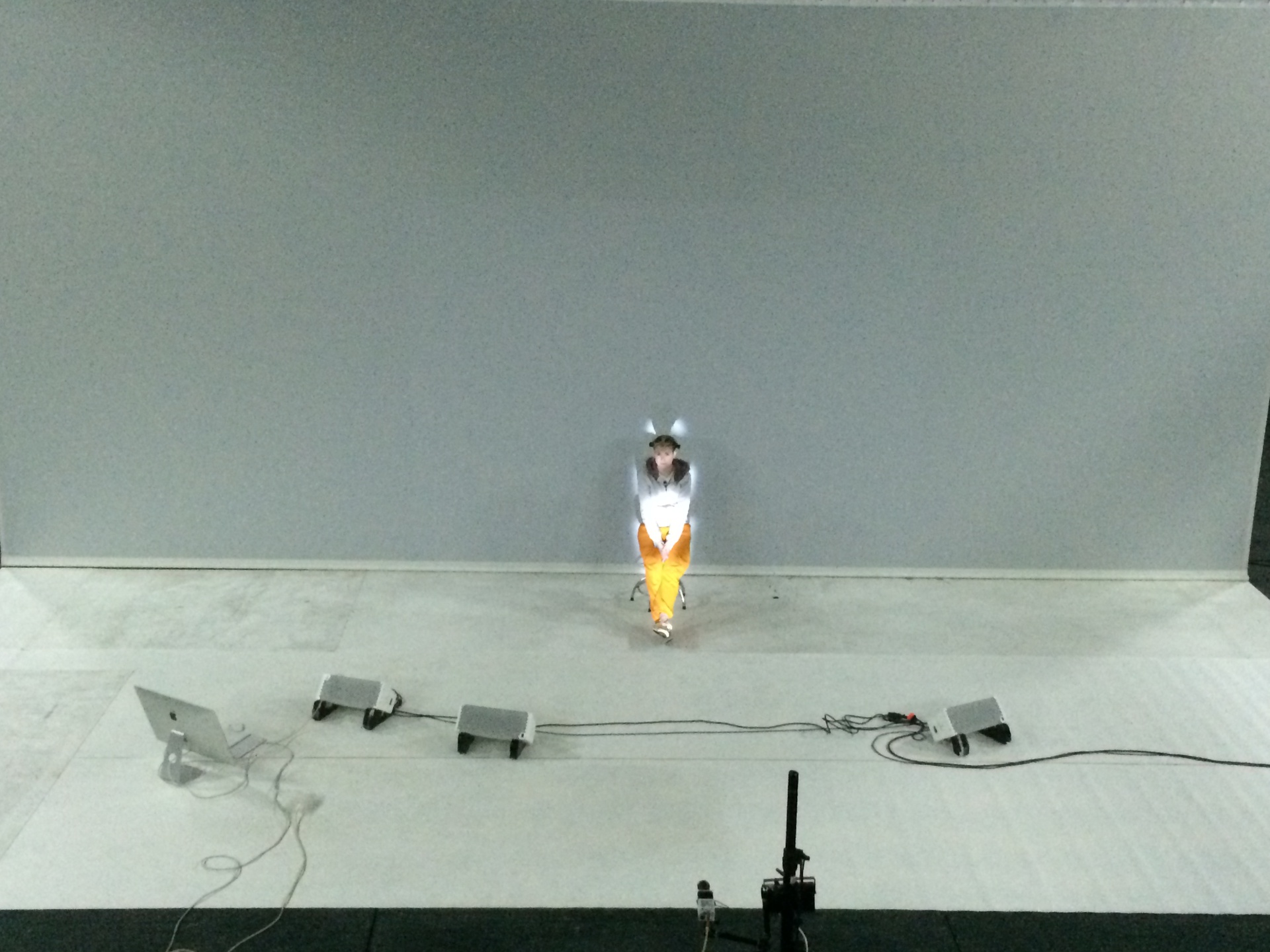

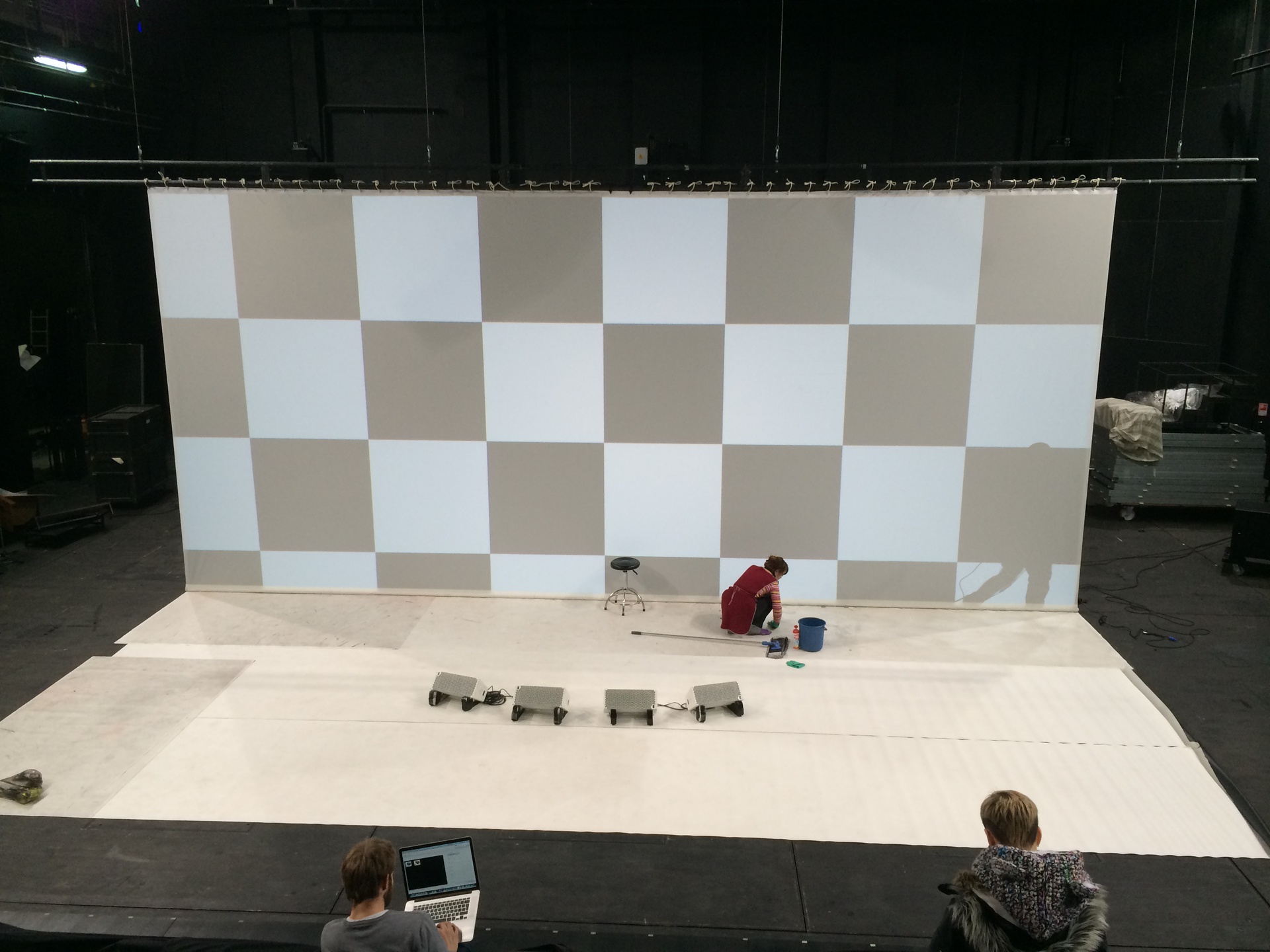
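The post doesn't show how the Emotiv readings become control parameters. As a minimal sketch, assume the SDK delivers an excitement score normalized to 0..1 and a mental-command "power" in the same range. The class and function names below are hypothetical helpers, not Emotiv SDK calls. One simple approach smooths the noisy score with an exponential moving average, maps it linearly onto a parameter range, and treats a trained mental command as "on" above a threshold:

```python
from typing import Optional


class AffectSmoother:
    """Exponential moving average for a noisy 0..1 affective score (hypothetical helper)."""

    def __init__(self, alpha: float = 0.1) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, sample: float) -> float:
        sample = min(max(sample, 0.0), 1.0)  # clamp to the assumed 0..1 range
        if self.value is None:
            self.value = sample  # the first reading initialises the average
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value


def map_range(x: float, lo: float, hi: float) -> float:
    """Linearly map a 0..1 value onto [lo, hi]."""
    return lo + (hi - lo) * x


def command_triggered(power: float, threshold: float = 0.6) -> bool:
    """Treat a trained mental command as active once its power reaches the threshold."""
    return power >= threshold


if __name__ == "__main__":
    excitement = AffectSmoother(alpha=0.2)
    for raw in [0.1, 0.9, 0.8, 0.85, 0.2]:
        level = excitement.update(raw)
        # e.g. drive a hypothetical "particle speed" parameter of the graphics
        print(f"level={level:.3f} speed={map_range(level, 0.5, 5.0):.2f}")
```

A smaller `alpha` makes the picture react more calmly but with more lag. Because the performer sees and hears the result, this lag is part of the biofeedback loop.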

The stage itself consists of two 10 × 4.5 m screens for the projected graphics (did I mention that we use no pre-recorded video and that all graphics are generated in real time?), a separate projection onto the performer's silhouette, 14 audio channels, 2 subwoofers, an IR camera, a Kinect, and a Clay Paky A.leda Wash K20 lighting fixture, which in one of the scenes is controlled interactively by Sasha's head. All of this is run by four computers: 2 PCs generate the projections (on the floor, the rear-projection screen and Sasha's silhouette), 1 PC (or rather an iMac running Windows) handles the interface and the Kinect, and 1 Mac generates the sound. And yes, the sound is also generated in real time, including the surround parts.
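The post doesn't say how Sasha's head drives the fixture. Here is a minimal sketch under stated assumptions: the head position arrives from the Kinect normalized to 0..1, and the fixture runs in a mode with 16-bit pan and tilt, split into coarse and fine DMX channels. The pan/tilt windows and dictionary keys are hypothetical, so check the fixture's own DMX chart. The code maps the head position into a limited window of the fixture's range so the beam stays on stage:

```python
from typing import Dict, Tuple


def _clamp01(x: float) -> float:
    return min(max(x, 0.0), 1.0)


def to_16bit(x: float) -> int:
    """Scale a 0..1 value to the 0..65535 range of a 16-bit DMX parameter."""
    return round(_clamp01(x) * 65535)


def head_to_pan_tilt(head_x: float, head_y: float,
                     pan_span: Tuple[float, float] = (0.25, 0.75),
                     tilt_span: Tuple[float, float] = (0.40, 0.60)) -> Dict[str, int]:
    """Map a normalized head position to pan/tilt coarse and fine DMX values.

    pan_span / tilt_span are hypothetical windows (fractions of the fixture's
    full pan and tilt travel) that keep the beam pointed at the stage.
    """
    pan = to_16bit(pan_span[0] + (pan_span[1] - pan_span[0]) * _clamp01(head_x))
    tilt = to_16bit(tilt_span[0] + (tilt_span[1] - tilt_span[0]) * _clamp01(head_y))
    return {
        "pan_coarse": pan >> 8, "pan_fine": pan & 0xFF,    # high byte, low byte
        "tilt_coarse": tilt >> 8, "tilt_fine": tilt & 0xFF,
    }
```

Using 16-bit coarse and fine channels instead of 8-bit values avoids visible stepping when the beam follows slow head movements.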

Tools

A bit more about the technical side. The graphics software was written in C++ using the openFrameworks framework. For lighting up the performer's silhouette we use OpenCV, which processes the image coming from the IR camera. In the photo below you can see the analog camera and the IR illuminator:

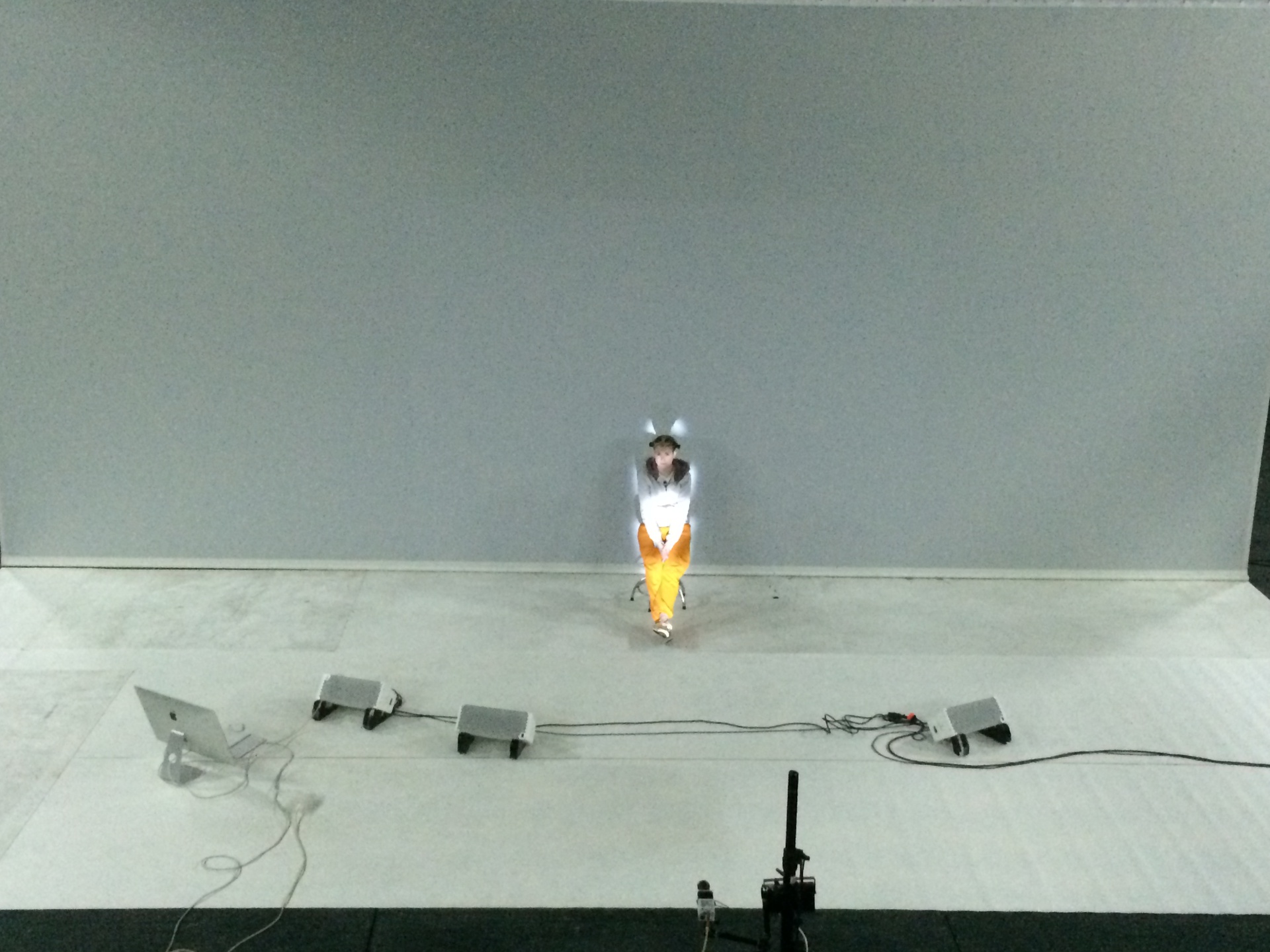
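The post doesn't detail the OpenCV pipeline. A common approach for an IR-lit performer is to threshold the brightness of the IR frame. Pixels that reflect enough IR become the silhouette mask, which then limits the projection to the body. In OpenCV this is typically `cv2.threshold` followed by `cv2.findContours`. The dependency-free sketch below shows the same idea, assuming an 8-bit grayscale frame given as a list of rows and a hand-picked threshold, and it also computes the bounding box of the mask:

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # 8-bit grayscale IR image: rows of pixel values 0..255
Mask = List[List[bool]]


def silhouette_mask(frame: Frame, threshold: int = 128) -> Mask:
    """True where the pixel reflects more IR than the threshold."""
    return [[px > threshold for px in row] for row in frame]


def bounding_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) of the True pixels, or None if the mask is empty."""
    xs = [x for row in mask for x, on in enumerate(row) if on]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)
```

Clothing that reflects IR poorly falls below the threshold and drops out of the mask. A fixed threshold cannot recover such regions, so the fix has to happen on the costume side.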

Below you can see how the silhouette lighting looks. The illumination is uneven (the upper part of the body is not lit) because Sasha's "home" clothes reflect IR radiation poorly. For the performance, of course, a special suit was sewn from a fabric approved by us, the programmers.

The Kinect is used to recognize some of the gestures:

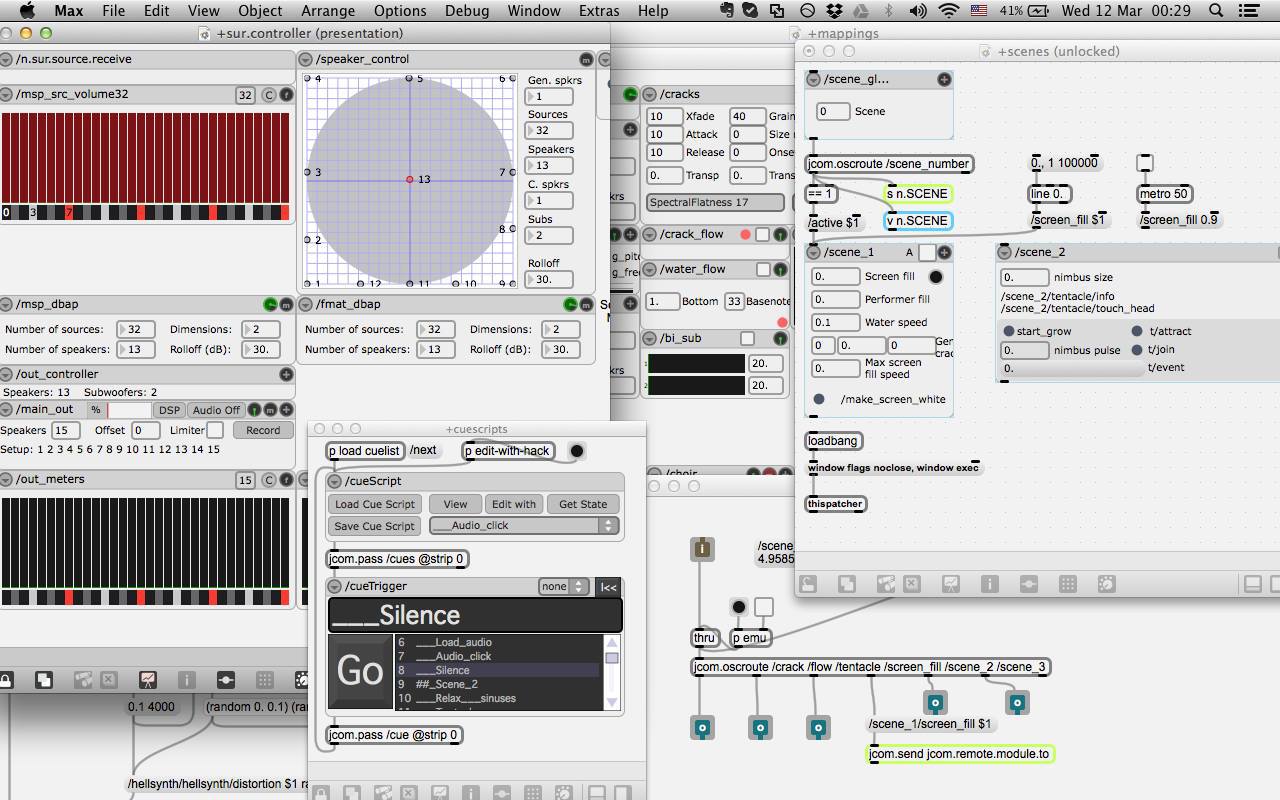

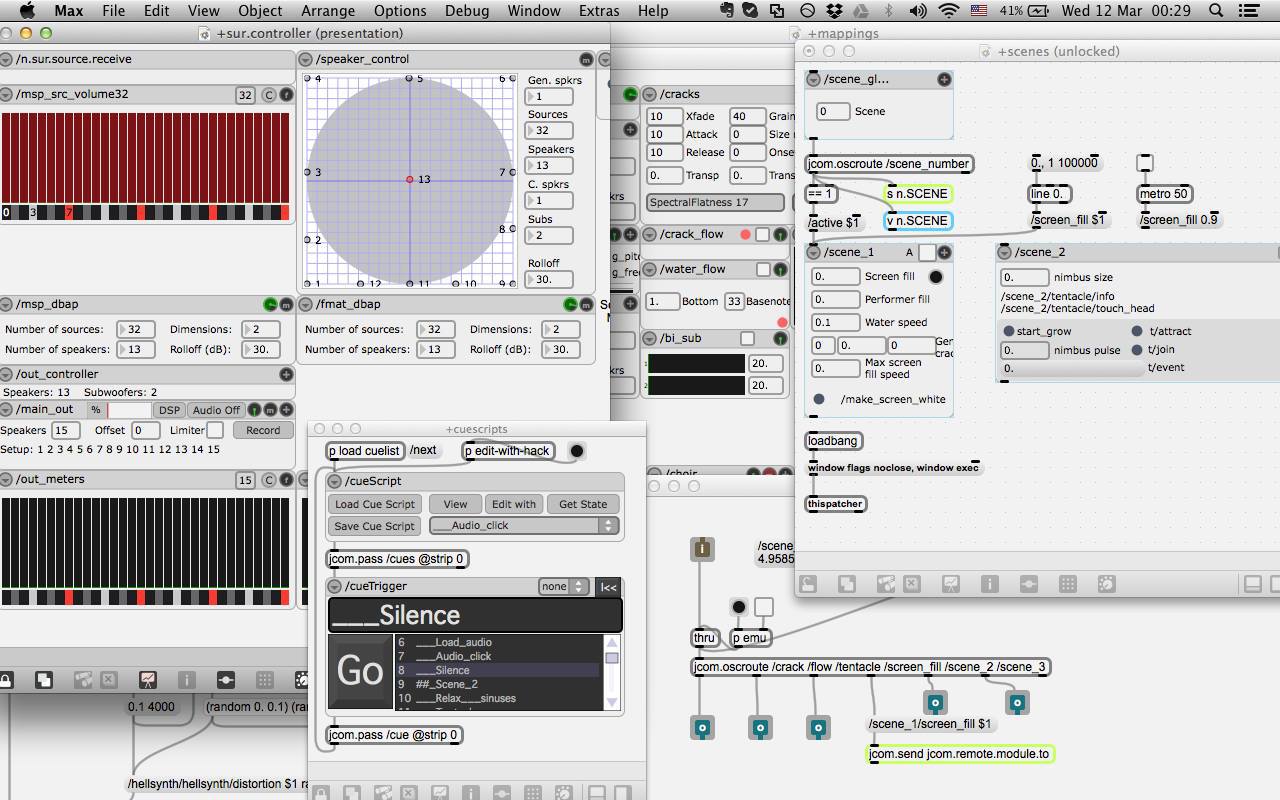
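The post doesn't say which gestures are recognized or which Kinect library is used. As a hedged sketch, assume skeleton joints arrive every frame as (x, y, z) in metres with y pointing up, under hypothetical joint names. A simple rule-based "both hands above the head" gesture could be debounced so that it fires once, after the pose has been held for several consecutive frames:

```python
from typing import Dict, Tuple

Joint = Tuple[float, float, float]  # (x, y, z) in metres, y pointing up (assumed)


class HandsUpDetector:
    """Fires once both hands stay above the head for `hold_frames` consecutive frames."""

    def __init__(self, margin: float = 0.1, hold_frames: int = 10) -> None:
        self.margin = margin          # how far above the head the hands must be
        self.hold_frames = hold_frames
        self.count = 0                # consecutive frames the pose has been held

    def update(self, joints: Dict[str, Joint]) -> bool:
        head_y = joints["head"][1]
        up = (joints["hand_left"][1] > head_y + self.margin and
              joints["hand_right"][1] > head_y + self.margin)
        self.count = self.count + 1 if up else 0
        # Report the gesture exactly once, on the frame the hold completes.
        return self.count == self.hold_frames
```

Requiring the pose to be held filters out single-frame tracking glitches, which are common with skeleton data.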

Now on to the sound. The sound software was built in Max/MSP, a visual programming environment for creating interactive audiovisual applications. The history of Max/MSP begins in the late 1980s at the famous Paris institute IRCAM (Institut de Recherche et Coordination Acoustique/Musique); I once wrote an overview of this environment. The sound patch of "Eurointegral" looks like this:

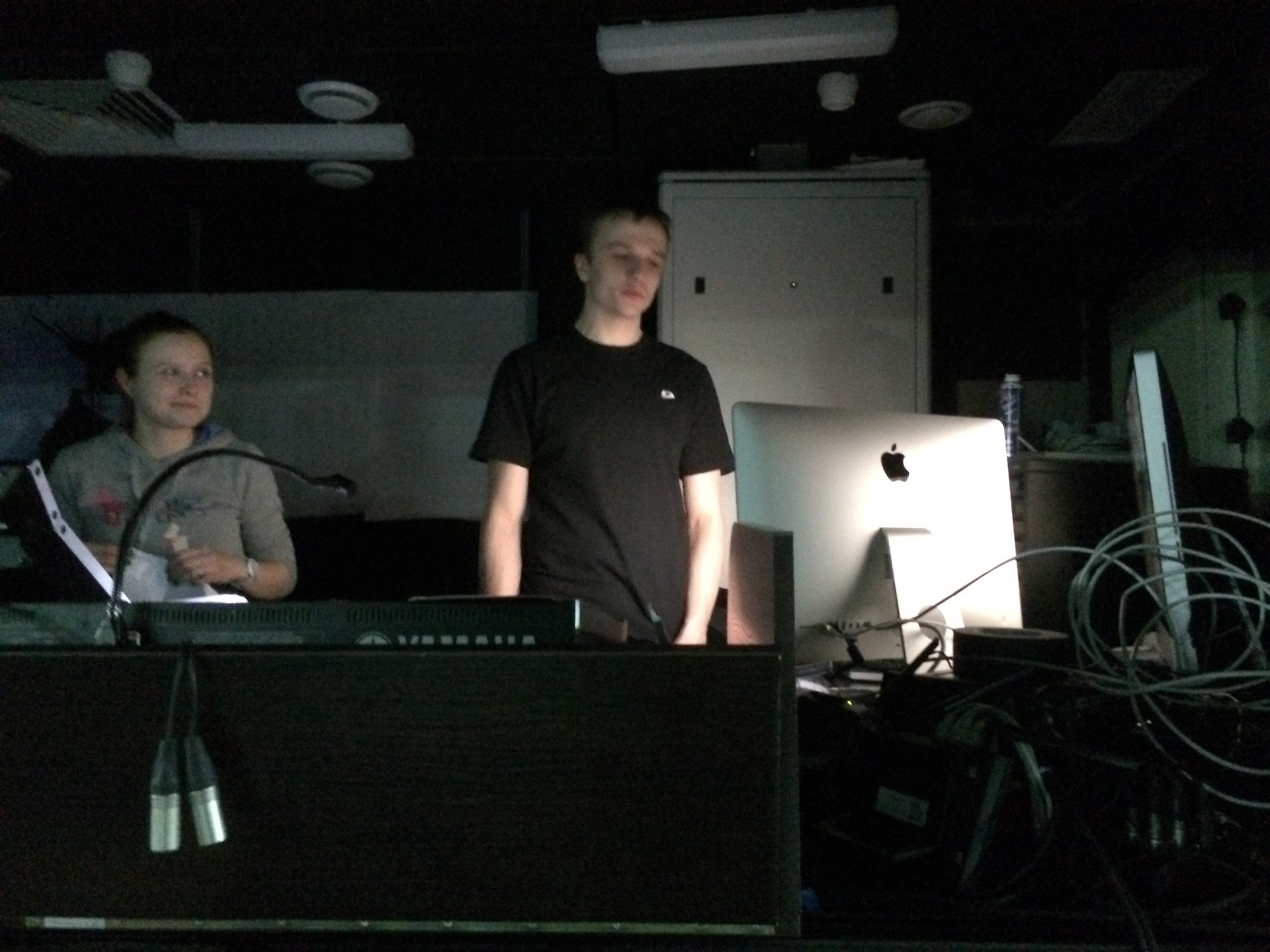

From this patch the sound is sent over optical cable, via an RME MADIface, to a Yamaha M7CL mixer. And here is Mary, the theater's very capable sound engineer:

As you may have noticed in an earlier photo, there are also speakers in the center of the stage. This "trick" goes back to the Acousmonium, developed by students of Pierre Schaeffer in the 1970s at the GRM center in Paris (by the way, at the end of June the theater's media studio plans to host a workshop by Thomas Gorbach, the author of the Austrian Acousmonium). It creates the sensation that the sound comes from the center of the stage.

Besides being fundamentally spatial, the sound of the performance is also interactive: no Ableton, no audio players. To achieve this, the graphics program continuously transmits information about what is being drawn at the moment, and the sound is generated from that graphics. For example, I'll let you in on a secret: in the first scene a large number of identical graphic objects are drawn. For each such object, its position and growth are sent over the network, and this is used to articulate each element separately and send it to the corresponding point of the spatial sound field. The sound in turn affects the performer's state, which in turn affects the graphics.
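The post doesn't name the protocol between the graphics computers and the Max/MSP patch. OSC over UDP is a common choice with Max, whose [udpreceive] object understands it. As a sketch under that assumption, here is a hand-encoded OSC message for one element. It uses a hypothetical address scheme `/element/<id>`, with x, y and growth sent as 32-bit floats:

```python
import socket
import struct
from typing import Sequence


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, args: Sequence[float]) -> bytes:
    """Encode an OSC message whose arguments are all float32 (big-endian)."""
    type_tags = "," + "f" * len(args)
    payload = b"".join(struct.pack(">f", float(a)) for a in args)
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def send_element(sock: socket.socket, host: str, port: int,
                 element_id: int, x: float, y: float, growth: float) -> None:
    """Send one element's position and growth to the sound computer (hypothetical scheme)."""
    sock.sendto(osc_message(f"/element/{element_id}", [x, y, growth]), (host, port))
```

Usage would be along the lines of `send_element(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), "192.168.0.20", 7400, 7, 0.3, 0.8, 0.1)`, where the host and port are placeholders for the sound Mac. Sending one small datagram per element per frame keeps each object independently addressable on the Max side.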

When and where

The event starts at 19:30 and consists of three parts: 1) a screening of a film about the making of the show, 2) the performance itself, and 3) an open discussion where you can ask the authors any questions.

In addition, before each event, at 18:00 in the foyer of the New Stage, there will be a new lecture on technological art. Admission to the lecture is free.

The next performances will take place on April 3, 4 and 5 at Fontanka 49A, St. Petersburg.

It is already known that on April 3 there will be a lecture by Lyubov Bugayeva, "Neurocinema and Neuromediator". Follow the link to sign up for the event.

Please note: the performance contains loud sound and stroboscopic effects, so it is not recommended for people with epilepsy.

The performance's pages on social networks: vk, facebook.

The process

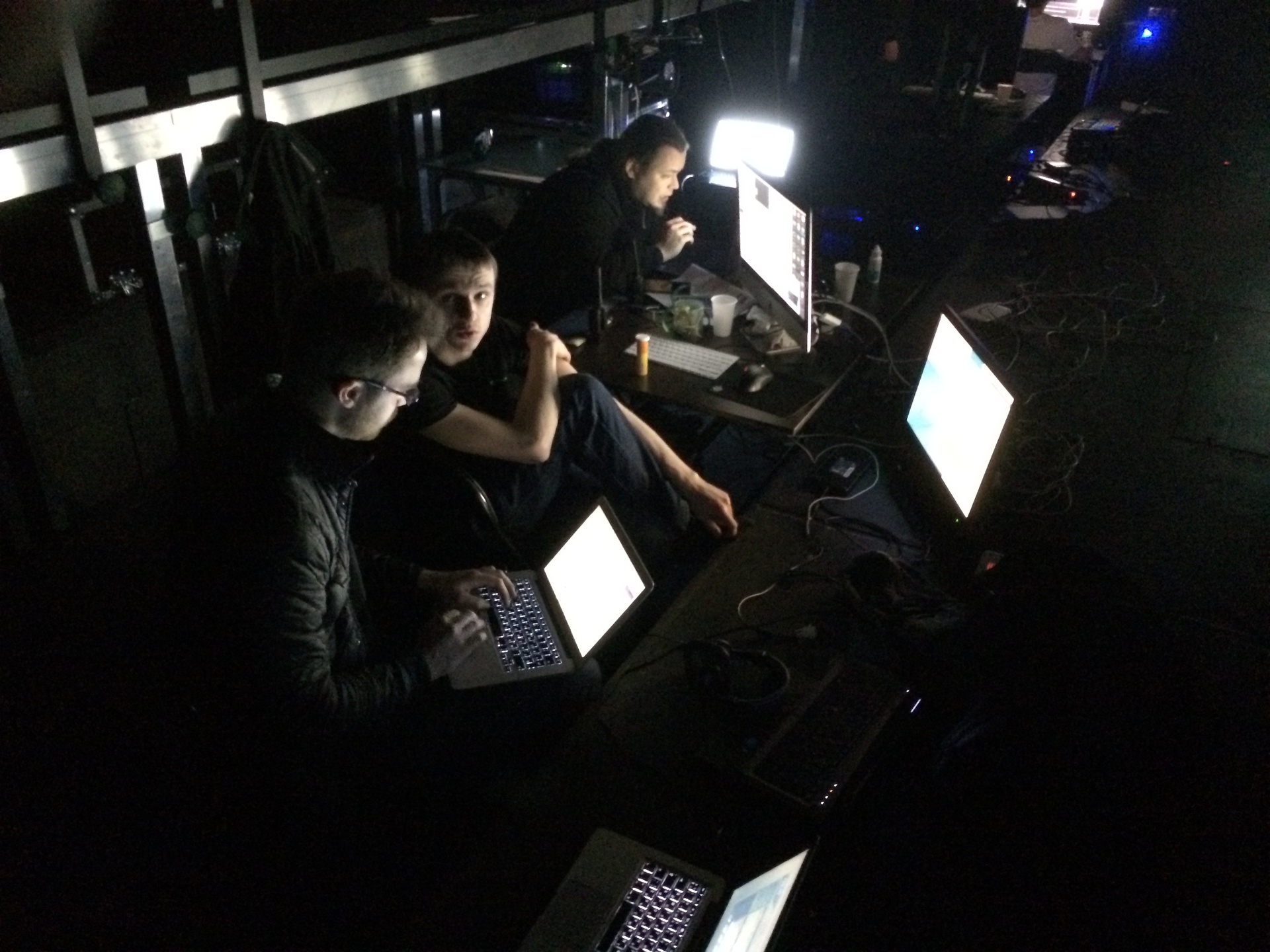

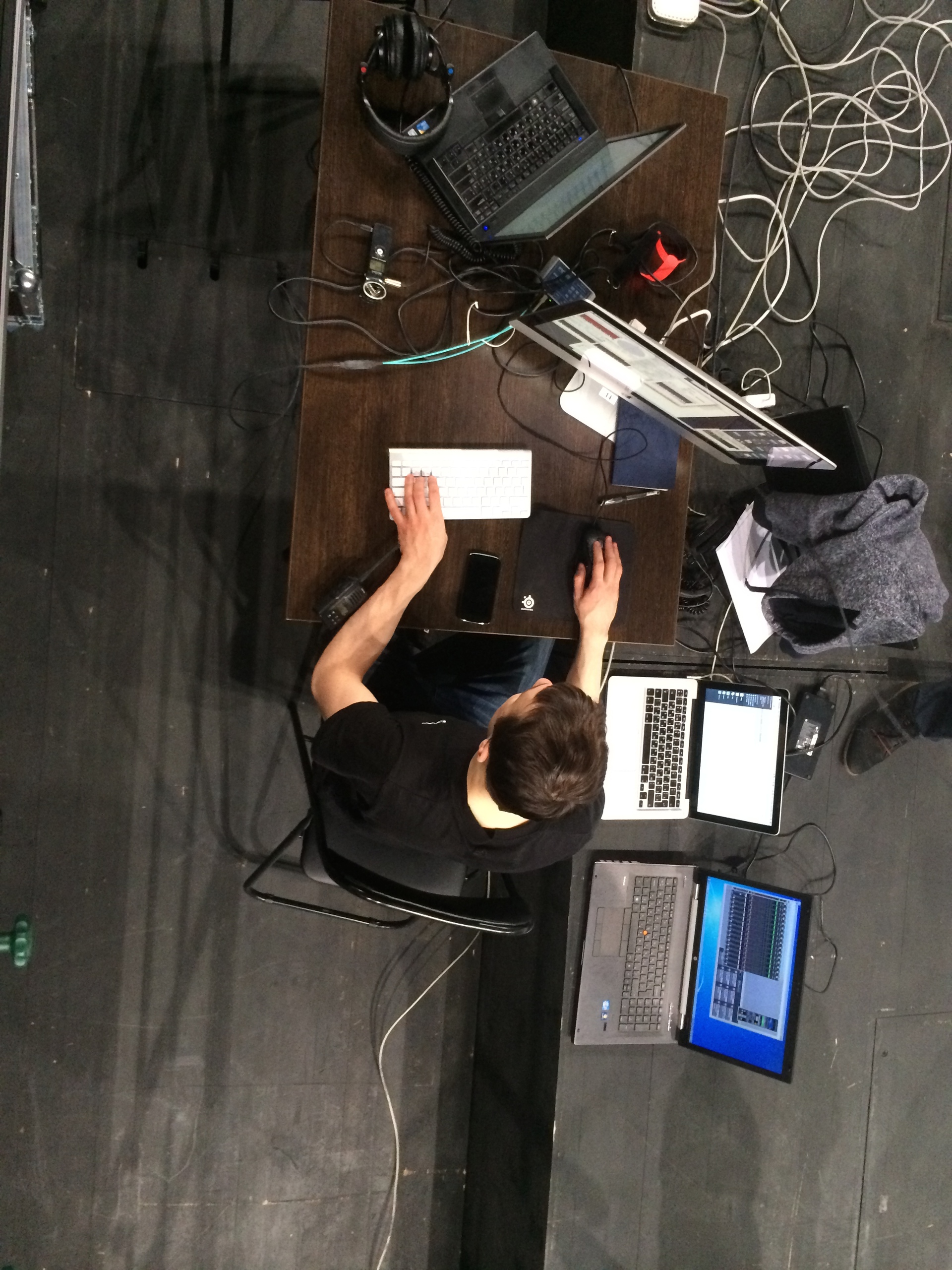

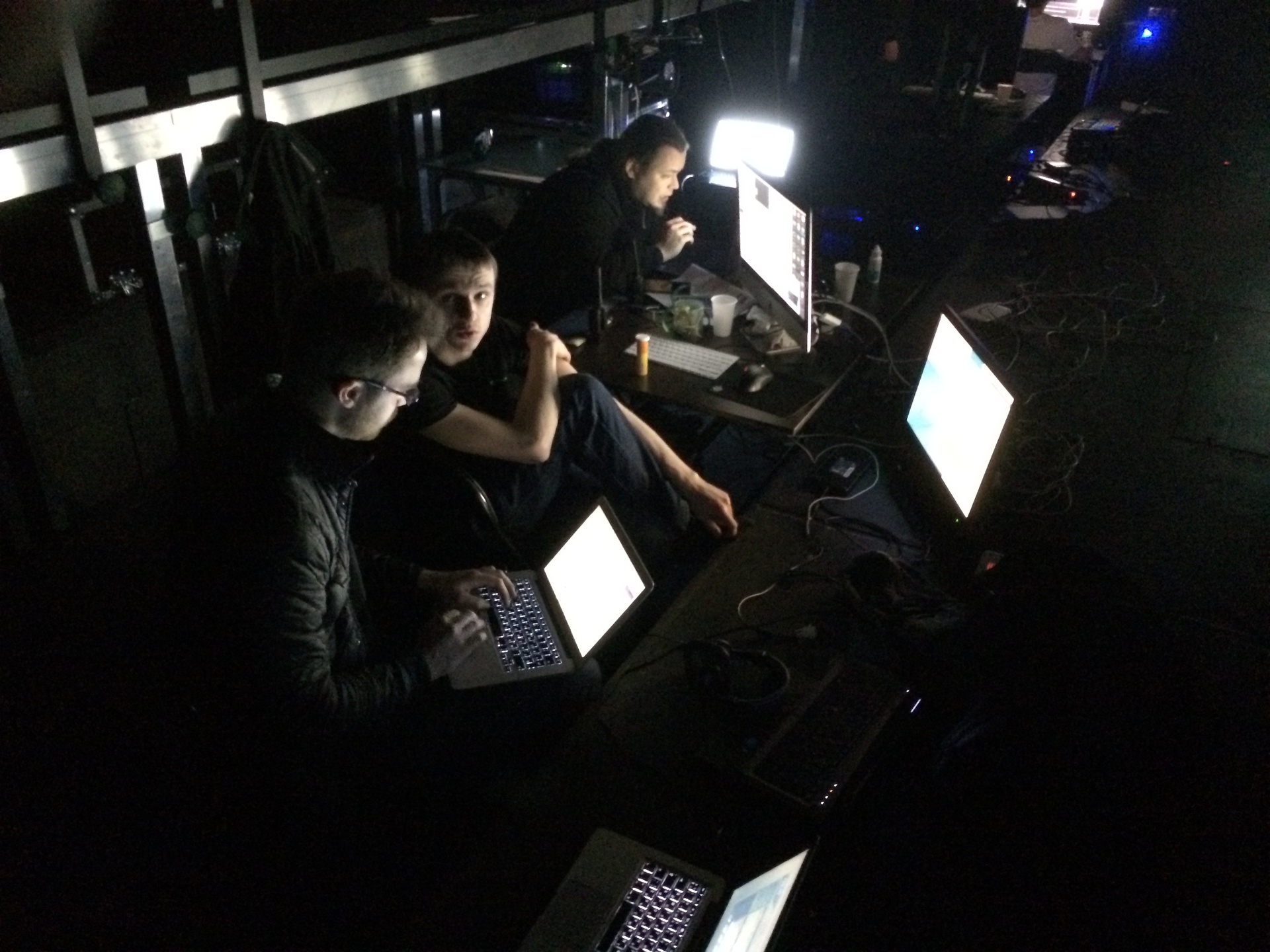

As a Habr exclusive, a few photos of the process.

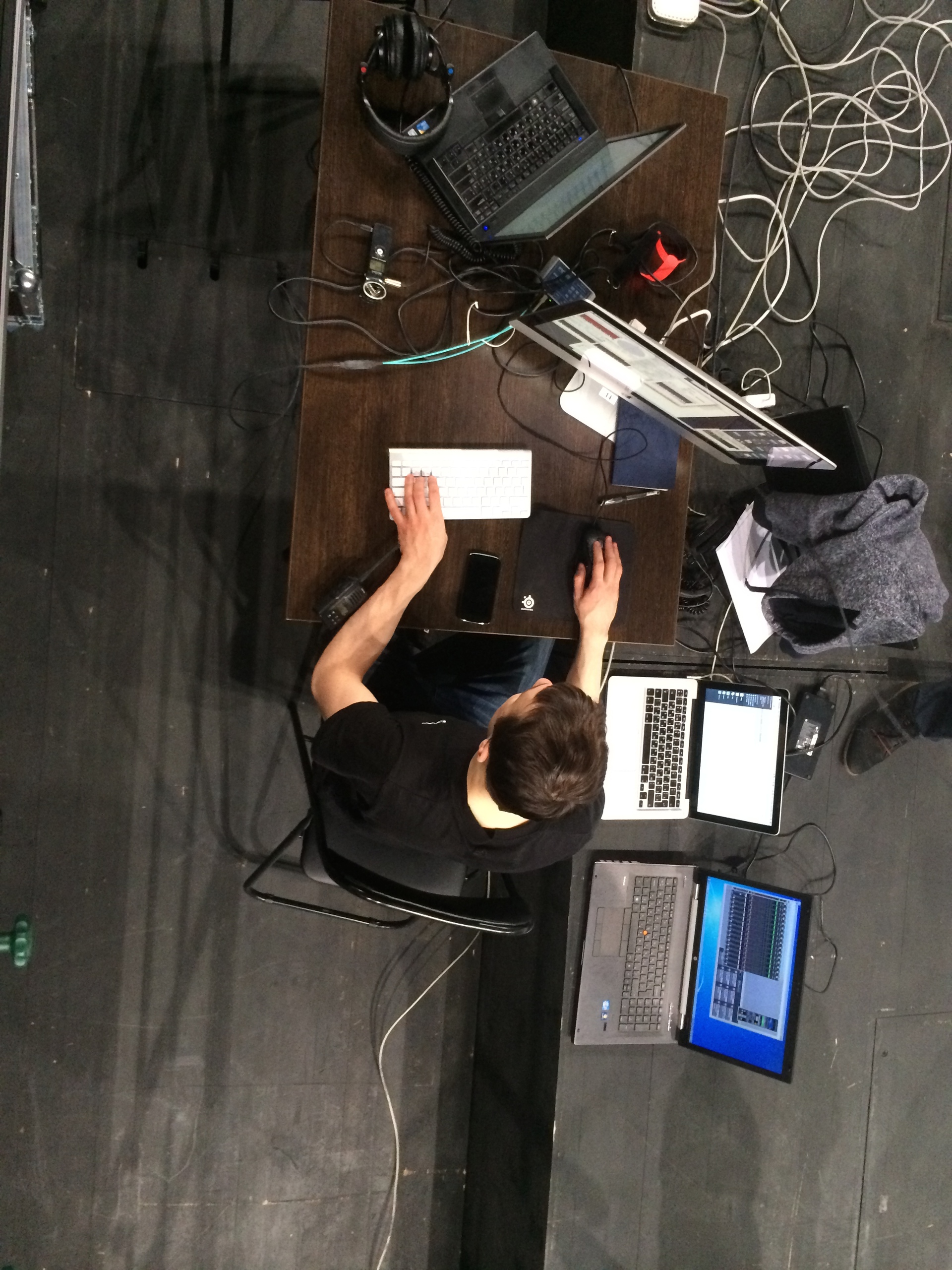

On the left sits my friend, a computer network specialist, setting up the network for controlling the lighting fixtures programmatically over the Art-Net protocol.
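The post only names the protocol. To illustrate what controlling light programmatically over Art-Net involves, here is a sketch that builds an ArtDmx packet by hand. ArtDmx is the Art-Net message that carries DMX channel values. The field layout follows the Art-Net specification. The universe number and the target IP you would use are placeholders for your own rig:

```python
import socket
import struct

ARTNET_PORT = 6454  # standard Art-Net UDP port


def artdmx_packet(universe: int, dmx: bytes, sequence: int = 0) -> bytes:
    """Build an ArtDmx packet (OpCode 0x5000) carrying up to 512 DMX channel values.

    `universe` is the 15-bit Port-Address; `sequence` 0 disables sequencing.
    """
    if not 0 <= universe < 0x8000:
        raise ValueError("universe must be a 15-bit Port-Address")
    if len(dmx) > 512:
        raise ValueError("a DMX universe has at most 512 channels")
    if len(dmx) < 2:
        dmx = dmx.ljust(2, b"\x00")   # minimum data length is 2
    elif len(dmx) % 2:
        dmx += b"\x00"                # data length must be even
    header = (
        b"Art-Net\x00"                                      # ID
        + struct.pack("<H", 0x5000)                         # OpCode, low byte first
        + bytes([0, 14])                                    # protocol version 14 (Hi, Lo)
        + bytes([sequence & 0xFF, 0])                       # Sequence, Physical
        + bytes([universe & 0xFF, (universe >> 8) & 0x7F])  # SubUni, Net
        + struct.pack(">H", len(dmx))                       # Length, high byte first
    )
    return header + dmx


def send_dmx(sock: socket.socket, host: str, universe: int, dmx: bytes) -> None:
    """Send one ArtDmx frame to an Art-Net node (host is a placeholder)."""
    sock.sendto(artdmx_packet(universe, dmx), (host, ARTNET_PORT))
```

In a setup like the one described, the pan/tilt values computed from the performer's head would simply be written into the right slots of the `dmx` buffer before each send.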

Below are Alex Oleynikov and me, sitting with our slippers off and warming our feet on the radiator.

Article based on information from habrahabr.ru
